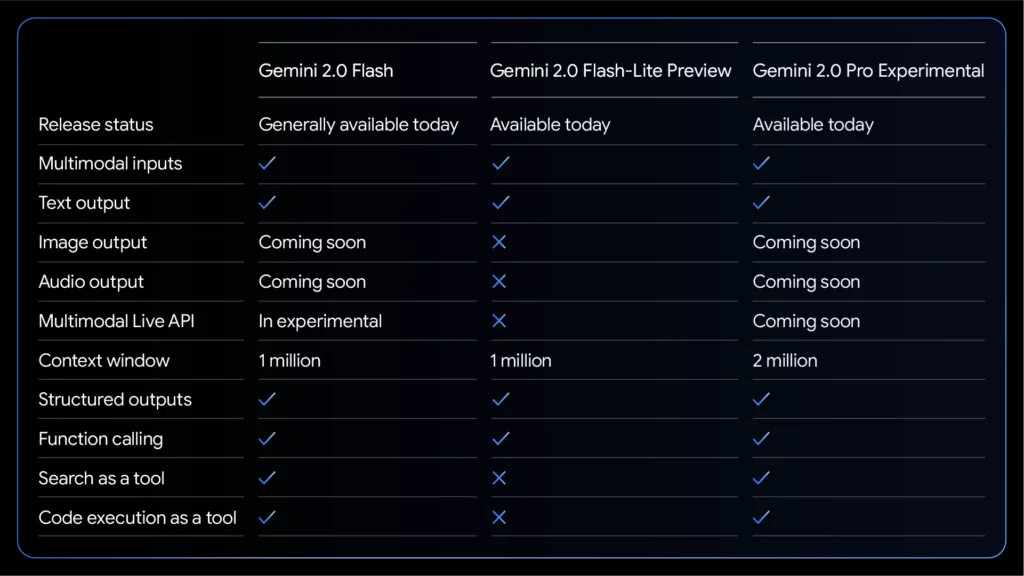

Google has made the Gemini 2.0 Flash model generally available, after first launching it as an experimental preview for developers in AI Studio.

The move, announced by the tech giant’s CEO Sundar Pichai on X, allows developers to build production applications through AI Studio or Vertex AI.

There is also a new model on the block: Gemini 2.0 Flash-Lite, now in public preview, which promises efficiency and capability at a lower cost.

Pichai also announced an experimental version of Gemini 2.0 Pro, which Google says offers stronger coding performance and better handling of complex prompts.

The model is available to Gemini Advanced users and is aimed at pushing the limits of coding and other complex tasks.

Google's reasoning model, Gemini 2.0 Flash Thinking, is also becoming available to all Gemini users.

This experimental version is multimodal and integrates with Google apps such as YouTube and Maps, positioning it as a versatile AI tool for people who rely heavily on Google products.

The releases are a statement of intent in the race to build AI reasoning models, where firms like OpenAI and DeepSeek are already making notable gains in speed and cost-effectiveness.

DeepSeek grabbed the world's attention with its R1 reasoning model, which matched or beat OpenAI’s o1 on several benchmarks at a fraction of the cost.

OpenAI’s o3-mini model added to the competition with free availability and improved benchmark results, albeit with some strain on its servers.
